This article explains how the three binding options -bsort, -bstart, and -bstop control where and in which order jobs are bound to CPUs in Gridware Cluster Scheduler.

Together, they define the scheduler’s fill-up pattern — how hardware resources are used, balanced, or reused across sockets, cores, and NUMA nodes.

Understanding these options is essential for creating advanced scheduling strategies such as energy-aware, thermal, or cost-optimized job placement.

Further Reading

If you missed the first two parts of this series, start there: they introduce the topology strings and binding basics that this article builds on.

Why order and range matter

Imagine your cluster as a row of houses, each with several rooms. Jobs are like guests arriving to stay overnight.

Without a plan, guests will pick rooms at random. Some might share walls and benefit from proximity; others might end up in different houses entirely.

Binding order and range options give the scheduler a plan — a way to decide how the “houses” and “rooms” are filled:

- -bsort defines which house or room type to consider first.
- -bstart sets where the first guest can enter.
- -bstop defines where the group must stop filling.

It’s a simple idea, but it’s the foundation for consistent, balanced, and sometimes energy-efficient scheduling. With these options in place, jobs don’t overlap or scatter unpredictably; they follow a defined fill pattern.

1. Sorting with -bsort

The -bsort option controls the order in which available CPU units are considered. It uses the same letters as topology strings (S, C, E, N, X, Y) but interprets them by utilization:

- Uppercase letters (S, C, N…) mean start with free resources.
- Lowercase letters (s, c, n…) mean start with already-used resources.

This simple mechanism lets administrators describe sophisticated fill-up patterns:

- -bsort "SC": fill unutilized sockets and cores first.
- -bsort "sC": reuse partially filled sockets before opening a new one.
- -bsort "nSyC" (GCS only): use already-utilized NUMA nodes and third-level caches before opening new ones, but prefer empty sockets and cores within those NUMA nodes and core groups.

If no sort order is defined, the scheduler uses the hardware’s natural order as reported by HWLOC. This is also the default behavior of most competing workload management systems, and it keeps placement predictable when a WLM offers no way to specify preferences.

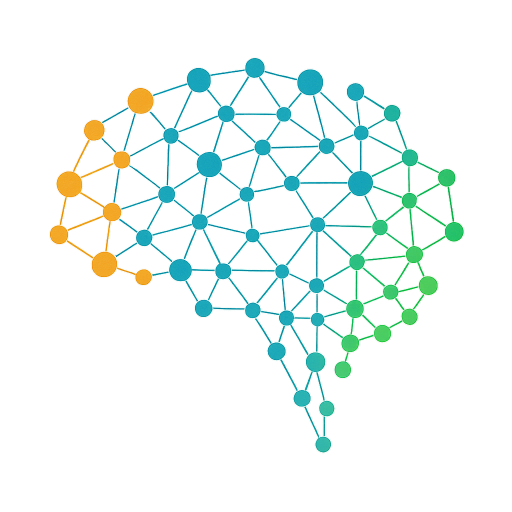
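To make the uppercase/lowercase idea concrete, here is a minimal Python sketch of a toy model. It is not how Gridware Cluster Scheduler implements -bsort; the `Host` type and the `order_free_cores` function are hypothetical names, and the model only looks at the socket letter (S or s). It assumes "utilization" of a socket simply means the number of its cores already in use.

```python
from typing import List, Tuple

# Toy host model (assumption): one inner list per socket, True = core in use.
Host = List[List[bool]]


def order_free_cores(host: Host, socket_letter: str) -> List[Tuple[int, int]]:
    """Return (socket, core) pairs of free cores in the order they would be filled.

    "S" visits the least-utilized sockets first, "s" the most-utilized ones.
    This is a simplification for illustration, not the scheduler's algorithm.
    """
    if socket_letter not in ("S", "s"):
        raise ValueError("this sketch only understands 'S' or 's'")
    prefer_used = socket_letter == "s"
    # sorted() is stable, so sockets with equal utilization keep hardware order.
    socket_order = sorted(
        range(len(host)),
        key=lambda i: -sum(host[i]) if prefer_used else sum(host[i]),
    )
    # Only free cores are candidates for binding in this toy model.
    return [
        (s, c)
        for s in socket_order
        for c, used in enumerate(host[s])
        if not used
    ]


# Dual-socket, quad-core host: two cores of socket 0 are already in use.
host = [[False, False, True, True], [False, False, False, False]]
print(order_free_cores(host, "S"))  # starts on the empty socket 1
print(order_free_cores(host, "s"))  # starts on the partially used socket 0
```

With "S" the empty socket is opened first, while "s" packs the job next to existing work. The real option applies the same idea at every topology level that appears in the sort string.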

2. Defining the range with -bstart and -bstop

After sorting, the scheduler knows which binding units exist and in what order they should be considered. The next question is: how much of that ordered list should be used? That’s what -bstart and -bstop control.

You can think of the sorted topology as a long sequence of nodes — a string that lists sockets, cores, or cache domains in the order defined by -bsort.

With -bstart and -bstop, you mark a window inside that string to show where binding is allowed.

Start here → [================] ← Stop here

The brackets represent the usable part of the topology. Binding can happen only inside this bracketed region; everything outside it is ignored. This is especially useful when you want to reserve or exclude certain parts of a host, for example to keep the first socket free for system services or to group jobs within a specific NUMA region.

Consider a dual-socket, quad-core host represented as:

SCCccSCCCC (lowercase c marks partially utilized cores)

- -bstart S -bstop s → the scheduler begins binding at the first unutilized socket (S) and stops before the first partially used socket (s), effectively limiting the job to the clean half of the machine: SCCcc[SCCCC]
- -bstart s -bstop S → reversing the range starts binding at the first used socket (s) and continues until the next free socket (S): [SCCcc]SCCCC

You can imagine this as managing a parking lot: -bsort arranges the parking spaces by preference, and -bstart/-bstop draw the lines around the section that is currently open for parking. Jobs will always stay within that section, preventing overlap and ensuring predictable placement.
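The window idea can be sketched in a few lines of Python. This is a toy string model, not the scheduler's implementation, and `mark_window` is a hypothetical helper. It assumes the partially used first socket is written as a lowercase s in the sorted string (so the host reads sCCccSCCCC), that the window opens at the first unit matching -bstart, and that it closes just before the next unit matching -bstop, or at the end of the string if there is none.

```python
def mark_window(topology: str, start: str, stop: str) -> str:
    """Bracket the part of a sorted topology string where binding is allowed.

    Assumptions of this sketch: matching is case-sensitive, the window opens
    at the first `start` letter and closes before the next `stop` letter.
    """
    begin = topology.find(start)
    if begin == -1:
        # No unit matches -bstart: nothing is selected in this toy model.
        return topology
    end = topology.find(stop, begin + 1)
    if end == -1:
        # No stop unit after the start: the window runs to the end.
        end = len(topology)
    return f"{topology[:begin]}[{topology[begin:end]}]{topology[end:]}"


host = "sCCccSCCCC"  # assumed notation: first socket partially used
print(mark_window(host, "S", "s"))  # sCCcc[SCCCC]
print(mark_window(host, "s", "S"))  # [sCCcc]SCCCC
```

Under these assumptions the two calls reproduce the bracketed regions from the example: the first keeps the job on the clean socket, the second confines it to the socket that is already in use.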

3. Real-world significance

At first glance, -bsort, -bstart, and -bstop may seem like low-level tweaks. In reality, they are the building blocks of enterprise scheduling strategy.

These options influence how load, heat, and power consumption are distributed across hardware, and they provide the basis for higher-level policies such as:

Energy-Aware Scheduling – fill one socket or die before activating another.

Thermal & Power-Density Balancing – distribute workloads evenly across nodes.

License- or Cost-Aware Placement – schedule on specific CPUs first.

Cache & Memory-Bandwidth Optimization – keep related processes close to shared caches or NUMA regions.

In GCS, JSV (Job Submission Verifier) scripts can dynamically adjust these settings to enforce global policies across the cluster.
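As a rough illustration of what such a policy layer might decide, the Python sketch below maps a policy name to a -bsort string. The policy names, the `POLICY_TO_BSORT` table, and the `binding_args` function are all hypothetical, and the mapping is an assumption derived from the -bsort examples in section 1. It does not use the JSV interface; it only shows the decision itself.

```python
from typing import Dict, List

# Hypothetical policy table; the sort strings come from the examples in section 1.
POLICY_TO_BSORT: Dict[str, str] = {
    "energy": "sC",  # reuse partially filled sockets before opening a new one
    "spread": "SC",  # start with unutilized sockets and cores
}


def binding_args(policy: str) -> List[str]:
    """Return the -bsort option for a named policy (illustrative only)."""
    if policy not in POLICY_TO_BSORT:
        raise ValueError(f"unknown policy: {policy}")
    return ["-bsort", POLICY_TO_BSORT[policy]]


print(binding_args("energy"))  # ['-bsort', 'sC']
```

A real JSV script would apply the chosen value to the job at submission time; how that is done depends on the JSV interface and is not shown here.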

4. Looking ahead

This post closes the “mechanics” phase of the binding series. In the next article, we’ll apply everything we’ve learned to real enterprise use cases — combining sorting, filtering, and range selection to implement:

- Energy-optimized cluster configurations

- Thermal-balanced compute fabrics

- Cost- and license-aware job scheduling

What seems like three small options today will soon become the core tools for advanced resource optimization.